OpenAI 'Unsatisfied' With Some Nvidia Chips, Has Been Looking For Alternatives For Months: Report

Trouble in paradise between Nvidia and OpenAI has intensified, as a new report from Reuters reveals that the ChatGPT maker has been 'unsatisfied' with Nvidia's chips, and has been seeking alternatives since last year.

The news comes on the heels of word that Nvidia's plans to invest $100 billion into OpenAI had stalled, just days after The Information reported that the chipmaker had been in talks to invest $60B more in OpenAI along with Microsoft and Amazon.

Nvidia had announced an agreement with OpenAI in September to build at least 10 gigawatts of computing power for OpenAI, along with an investment of up to $100 billion. However, CEO Jensen Huang told industry associates late last year that the $100 billion was neither binding nor finalized. He also slammed OpenAI's lack of discipline in its business strategy and expressed concerns about competitors such as Google and Anthropic, the WSJ reported.

Huang denied the report over the weekend, calling it "nonsense," but reiterated that the investment wouldn't exceed $100 billion.

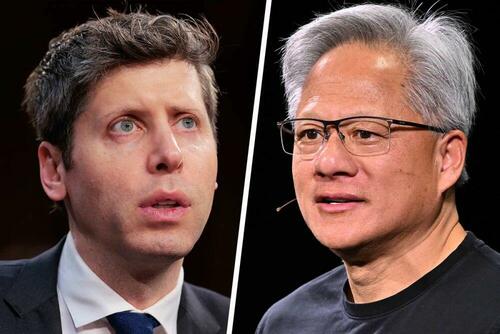

"We are going to make a huge investment in OpenAI. I believe in OpenAI, the work that they do is incredible, they are one of the most consequential companies of our time, and I really love working with Sam," he said, referring to OpenAI CEO Sam Altman.

"Sam is closing the round [of investment], and we will absolutely be involved," Huang added in comments to Bloomberg. "We will invest a great deal of money, probably the largest investment we've ever made."

The $100B deal was expected to close within weeks. Instead, negotiations have dragged on for months as OpenAI has struck deals with AMD and others for GPUs.

What could possibly be the source of the confusion…? pic.twitter.com/xzXFpEeyAc

— Compound248 💰 (@compound248) February 2, 2026

Yet now, apparently, it's OpenAI that's too cool for Nvidia.

The ChatGPT-maker’s shift in strategy, the details of which are first reported here, is over an increasing emphasis on chips used to perform specific elements of AI inference, the process when an AI model such as the one that powers the ChatGPT app responds to customer queries and requests. Nvidia remains dominant in chips for training large AI models, while inference has become a new front in the competition.

This decision by OpenAI and others to seek out alternatives in the inference chip market marks a significant test of Nvidia’s AI dominance and comes as the two companies are in investment talks. -Reuters

According to a person familiar with the matter, OpenAI's "shifting roadmap" changed its computational resource needs and "bogged down talks with Nvidia," Reuters continues. Meanwhile, according to two sources, OpenAI had been in discussions with several startups, including Cerebras and Groq, to provide chips for faster inference. However, Nvidia's $20 billion licensing deal with Groq ended OpenAI's talks with Groq. In a statement, Nvidia said that Groq's IP was highly complementary to Nvidia's product roadmap.

Alternatives to Nvidia

OpenAI has been using Nvidia GPUs because their core design is well suited to crunching the massive datasets required to train large AI models like those behind ChatGPT. For a long time, Nvidia was the only game in town. However, AI advancements have increasingly focused on using trained models for inference and reasoning, which "could be a new, bigger stage of AI," per Reuters.

The ChatGPT-maker's search for GPU alternatives since last year has focused on companies building chips with large amounts of memory, called SRAM, embedded in the same piece of silicon as the rest of the chip. Packing as much costly SRAM as possible onto each chip can offer speed advantages for chatbots and other AI systems as they crunch requests from millions of users.

Inference leans more heavily on memory than training does, because the chip spends relatively more time fetching data from memory than performing mathematical operations. Nvidia and AMD GPUs rely on external memory that sits off the main chip, which adds processing time and slows how quickly users can interact with a chatbot.

Inside OpenAI, the issue became particularly visible in Codex, its product for generating computer code, which the company has been aggressively marketing, one of the sources added. OpenAI staff attributed some of Codex's weaknesses to Nvidia's GPU-based hardware, one source said.

According to the report, Altman held a Jan. 30 call with reporters where he said that OpenAI's coding models will "put a big premium on speed for coding work."

To that end, OpenAI on Monday announced a new Mac app for its Codex AI coding agent. The app is intended to serve as a "command center" for directing multiple coding agents and managing them across projects and long-running tasks.

h/t Capital.news